It’s easy to see, and pay attention to, only successful individuals and businesses, not the failures that fall by the wayside. This phenomenon is called survivorship bias, a cognitive error that occurs when we focus on the people or things that have “survived” a particular process while overlooking those that did not, which leads us to incorrect conclusions about a situation or phenomenon.

The infamous example of the World War II Plane

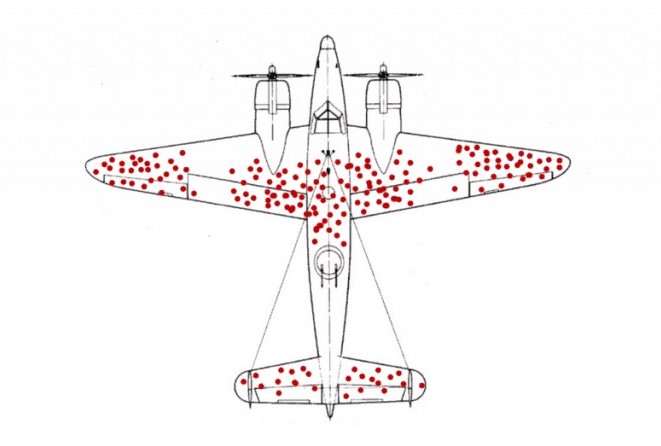

During World War II, the Allies were losing too many planes to enemy fire. Researchers proposed that more protective armour be added. However, only so much armour could be added to a plane before it became too heavy to fly. Specific parts of the plane had to be chosen to be armoured, with other parts left un-armoured.

Researchers analysed the locations of the bullet holes on all returning planes. They established that there were more bullet holes per square foot in the wings and the fuselage than in the engine and fuel system. These were the areas which had been, on average, the most peppered by bullets, so the logic followed that armour should be attached to them.

A mathematician named Abraham Wald didn’t agree. He proposed that armour be attached to all the places where there were few or no bullet holes. Why?

The bullet holes in the planes that had come home safely were, by definition, in places where a hit had not brought the aircraft down. Wald’s alternative logic was that the areas in these returned planes where they found no holes must be the places where the planes that weren’t so fortunate took fire. This brilliantly simple observation completely changed the approach the military took towards armouring their planes, and no doubt saved countless lives. This is a classic example of survivorship bias, where the data set analysed is only taken from the success stories.
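Wald’s reasoning can be illustrated with a small simulation. The sketch below is not Wald’s actual analysis: the section names follow the text, but the lethality probabilities, the number of hits per plane, and the assumption that every section is equally likely to be hit are all invented for illustration.

```python
import random

# Hypothetical chance that a single hit in each section downs the plane.
# These numbers are made up for illustration; they are not historical data.
LETHALITY = {"wings": 0.05, "fuselage": 0.05, "engine": 0.6, "fuel system": 0.5}


def fly_missions(n_planes=10_000, hits_per_plane=4, seed=0):
    """Return (hits on all planes, hits seen on returned planes) per section."""
    rng = random.Random(seed)
    sections = list(LETHALITY)
    all_hits = dict.fromkeys(sections, 0)       # every plane, returned or not
    survivor_hits = dict.fromkeys(sections, 0)  # only planes that came home
    for _ in range(n_planes):
        # Assumption: each hit lands in a section chosen uniformly at random.
        hits = [rng.choice(sections) for _ in range(hits_per_plane)]
        # The plane returns only if none of its hits proves fatal.
        survived = all(rng.random() > LETHALITY[s] for s in hits)
        for s in hits:
            all_hits[s] += 1
            if survived:
                survivor_hits[s] += 1
    return all_hits, survivor_hits


all_hits, survivor_hits = fly_missions()
for section in LETHALITY:
    print(f"{section:12} all planes: {all_hits[section]:6}  "
          f"returned planes: {survivor_hits[section]:6}")
```

Under these assumptions every section is hit about equally often across the whole fleet, yet the returned planes show far fewer holes in the engine and fuel system. Those are precisely the sections where a hit is most dangerous.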

More examples of survivorship bias

It is difficult to know how to respond to entrepreneurs who espouse the principles of Good to Great: Why Some Companies Make the Leap… and Others Don’t by Jim Collins, or In Search of Excellence: Lessons from America’s Best-Run Companies by Tom Peters. Both books are fundamentally flawed. The article How the Survivor Bias Distorts Reality explains:

In his 2001 book Good to Great (more than three million copies sold), Jim Collins culled 11 companies out of 1,435 whose stock beat the market average over a 40-year time span and then searched for shared characteristics among them that he believed accounted for their success. Instead, Collins should have started with a list of companies at the beginning of the test period and then used “plausible criteria to select eleven companies predicted to do better than the rest. These criteria must be applied in an objective way, without peeking at how the companies did over the next forty years. It is not fair or meaningful to predict which companies will do well after looking at which companies did well! [emphasis added] Those are not predictions, just history.” In fact, from 2001 through 2012 the stock of six of Collins’s 11 “great” companies did worse than the overall stock market, meaning that this system of post hoc analysis is fundamentally flawed.

This Freakonomics post From Good to Great … to Below Average unpacks the book further. One of the 11 companies Collins analysed was Fannie Mae, the US mortgage-backed securities business that is only alive today because the government bailed it out to the tune of $71 billion. If you had bought Fannie Mae stock around the time Good to Great was published, you would have lost over 80 percent of your initial investment. In Search of Excellence didn’t fare much better. The book identified eight common attributes of 43 “excellent” companies. Since then, of the 35 companies with publicly traded stocks, 20 have done worse than the market average.
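The problem with selecting companies after seeing their results can be sketched in a few lines of Python. This is a toy model, not a reconstruction of Collins’s method: it assumes, purely for illustration, that each company’s return in the “past” and “future” periods is independent luck drawn from the same normal distribution. Only the figures 1,435 and 11 come from the text; the function name and all other parameters are hypothetical.

```python
import random


def hindsight_picks(n_companies=1435, n_picks=11, trials=200, seed=1):
    """Share of hindsight-selected 'great' companies that later beat the market."""
    rng = random.Random(seed)
    beat_market = 0
    for _ in range(trials):
        # Assumption: returns in both periods are pure, independent luck.
        past = [rng.gauss(0, 1) for _ in range(n_companies)]
        future = [rng.gauss(0, 1) for _ in range(n_companies)]
        # Choose the "great" companies by looking at how they already did.
        picks = sorted(range(n_companies), key=lambda i: past[i], reverse=True)[:n_picks]
        market_average = sum(future) / n_companies
        beat_market += sum(future[i] > market_average for i in picks)
    return beat_market / (trials * n_picks)


share = hindsight_picks()
print(f"Share of hindsight picks that later beat the market: {share:.0%}")
```

In this model the chosen companies look exceptional in the period used to select them, but afterwards they beat the market only about half the time, which is what chance alone would produce.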

Walter Isaacson, the author of Steve Jobs, has also been criticised for falling victim to survivorship bias in the way that he constructs the story in his attempt to unpack the genius of Jobs.

Want to be the next Steve Jobs and create the next Apple Computer? Drop out of college and start a business with your buddies in the garage of your parents’ home. How many people have followed the Jobs model and failed? Who knows? No one writes books about them and their unsuccessful companies.

Warren Buffett on survivorship bias

Warren Buffett, now in his late eighties, is arguably the greatest investor of all time. In 1984 he wrote a seminal paper on ‘value investing’ entitled The Superinvestors of Graham-and-Doddsville. If you are interested in investing and achieving above-average returns, you should give it a read. For the purposes of this post, though, let’s focus on Buffett’s illustration of survivorship bias. He begins the paper with a wonderful short story:

Let’s assume we get 225 million Americans up tomorrow morning and we ask them all to wager a dollar. They go out in the morning at sunrise, and they all call the flip of a coin. If they call correctly, they win a dollar from those who called wrong. Each day the losers drop out, and on the subsequent day the stakes build as all previous winnings are put on the line. After ten flips on ten mornings, there will be approximately 220,000 people in the United States who have correctly called ten flips in a row. They each will have won a little over $1,000.

Now this group will probably start getting a little puffed up about this, human nature being what it is. They may try to be modest, but at cocktail parties they will occasionally admit to attractive members of the opposite sex what their technique is, and what marvelous insights they bring to the field of flipping.

Assuming that the winners are getting the appropriate rewards from the losers, in another ten days we will have 215 people who have successfully called their coin flips 20 times in a row and who, by this exercise, each have turned one dollar into a little over $1 million. $225 million would have been lost, $225 million would have been won.

By then, this group will really lose their heads. They will probably write books on “How I turned a Dollar into a Million in Twenty Days Working Thirty Seconds a Morning.” Worse yet, they’ll probably start jetting around the country attending seminars on efficient coin-flipping and tackling skeptical professors with, “If it can’t be done, why are there 215 of us?”

By then some business school professor will probably be rude enough to bring up the fact that if 225 million orangutans had engaged in a similar exercise, the results would be much the same — 215 egotistical orangutans with 20 straight winning flips.

This example illustrates why you won’t learn much from reading a book entitled “How I turned a Dollar into a Million in Twenty Days Working Thirty Seconds a Morning.” It’s worth avoiding any real-life books or articles with similar titles. You might gain a few helpful tips, but you won’t gain much wisdom.
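The figures in Buffett’s story follow from simple halving and doubling, which a few lines of Python can confirm. The function names below are illustrative; the population of 225 million, the one-dollar stake and the 10 and 20 flips come from the story.

```python
POPULATION = 225_000_000


def expected_survivors(population, flips):
    # Each fair coin flip halves the expected number of people still in the game.
    return population / 2 ** flips


def stake_after(flips, initial=1):
    # Winners collect the losers' stakes each round, so their money doubles per flip.
    return initial * 2 ** flips


for flips in (10, 20):
    print(flips, round(expected_survivors(POPULATION, flips)), stake_after(flips))
# 10 219727 1024
# 20 215 1048576
```

The output matches the story: roughly 220,000 people with a little over $1,000 after ten flips, and 215 people with a little over $1 million after twenty, with no skill involved at any point.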

Nassim Taleb on survivorship bias

In The Black Swan: The Impact of the Highly Improbable, Nassim Taleb raises the phenomenon of ‘alternative histories’ and the psychological ‘narrative fallacy’.

On alternative histories:

If we have heard of [history’s great generals and inventors], it is simply because they took considerable risks, along with thousands of others, and happened to win. They were intelligent, courageous, noble, had the highest possible obtainable culture in their day – but so did thousands of others who live in the musty footnotes of history.

On the narrative fallacy:

The narrative fallacy addresses our limited ability to look at sequences of facts without weaving an explanation into them, or, equivalently, forcing a logical link, an arrow of relationship, upon them. Explanations bind facts together. They make them all the more easily remembered; they help them make more sense. Where this propensity can go wrong is when it increases our impression of understanding.

In an earlier 2004 interview, Taleb explains that:

There is a silly book called A Millionaire Next Door, and one of the authors wrote an even sillier book called The Millionaire’s Mind. They interviewed a bunch of millionaires to figure out how these people got rich. Visibly they came up with bunch of traits. You need a little bit of intelligence, a lot of hard work, and a lot of risk-taking. And they derived that, hey, taking risk is good for you if you want to become a millionaire. What these people forgot to do is to go take a look at the less visible cemetery — in other words, bankrupt people, failures, people who went out of business — and look at their traits. They would have discovered that some of the same traits are shared by these people, like hard work and risk taking. This tells me that the unique trait that the millionaires had in common was mostly luck.

This bias makes us miscompute the odds and wrongly ascribe skills. If you funded 1,000,000 unemployed people endowed with no more than the ability to say “buy” or “sell”, odds are that you will break-even in the aggregate, minus transaction costs, but a few will hit the jackpot, simply because the base cohort is very large. It will be almost impossible not to have small Warren Buffets by luck alone. After the fact they will be very visible and will derive precise and well-sounding explanations about why they made it. It is difficult to argue with them; “nothing succeeds like success”. All these retrospective explanations are pervasive, but there are scientific methods to correct for the bias. This has not filtered through to the business world or the news media; researchers have evidence that professional fund managers are just no better than random and cost money to society (the total revenues from these transaction costs is in the hundreds of billion of dollars) but the public will remain convinced that “some” of these investors have skills.
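Taleb’s point about a large base cohort can be made concrete with a short simulation. This is a sketch under stated assumptions: the 1,000,000 traders come from the quote, while the ten-year horizon, the 50/50 chance of beating the market each year, and the function name are hypothetical choices made for illustration.

```python
import random


def lucky_streaks(n_traders=1_000_000, years=10, seed=42):
    """Count skill-free traders who beat the market in every single year."""
    rng = random.Random(seed)
    perfect = (1 << years) - 1  # bit pattern meaning "won every year"
    # Each bit of getrandbits(years) is one fair coin flip: 1 = beat the market.
    return sum(rng.getrandbits(years) == perfect for _ in range(n_traders))


streaks = lucky_streaks()
print(f"{streaks} of 1,000,000 random traders have a perfect ten-year record")
```

With a fair coin the expected count is 1,000,000 / 2^10, or roughly 977 traders, each of whom would have a flawless track record and a convincing story to explain it.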

How to avoid being duped by survivorship bias

It is common for us all, entrepreneurs included, to draw comparisons (often frustrated and unhealthy ones) with other apparently more successful individuals and businesses. If you find yourself in that situation, it’s wise to:

Recognise that what works for one person may not work for another. Entrepreneurs like Richard Branson deserve respect, but their highly quotable anecdotes are seized upon by many entrepreneurs as guaranteed formulas for success. For every Richard Branson, there are one thousand wannabes who tried to copy him, failed, and lost their house in the process.

Be highly sceptical of individual gurus and books, podcasts or other resources that appear to give you the ‘answer’ or ‘secret formula’ for achieving wealth, success and happiness.

Recognise that luck plays a huge role in success, though the path always looks obvious in hindsight. It is well recognised that successful people often fall foul of the narrative fallacy, overplaying the role that skill had in their success and underplaying the role of luck. For more on this, read Howard Marks & Nassim Taleb on the role of luck & randomness.

Heed the advice of the Harvard Business Review article Stop Reading Lists of Things Successful People Do, which describes such lists as “potentially harmful to decision makers, managers, and entrepreneurs.” What do the most successful people do before breakfast? They don’t read books about What the Most Successful People Do Before Breakfast!

Also heed the advice of David McRaney, from the You Are Not So Smart blog:

If you spend your life only learning from survivors, buying books about successful people and poring over the history of companies that shook the planet, your knowledge of the world will be strongly biased and enormously incomplete. As best I can tell, here is the trick: When looking for advice, you should look for what not to do, for what is missing, but don’t expect to find it among the quotes and biographical records of people whose signals rose above the noise. They may have no idea how or if they lucked up.

I’m Richard Hughes-Jones.

I’m an Executive Coach to founders, CEOs and senior technology leaders in high-growth technology businesses, the investment industry and progressive corporates.

Having often already mastered the technical aspects of their craft, I help my clients navigate the complex adaptive challenges associated with executive-level leadership and growth.

I’m based in London and coach internationally. Find out more about my Executive Coaching services and get in touch if you’d like to explore working together.